Q&A with Professor Julia Hirschberg

Professor Julia Hirschberg has been a pioneer in natural language processing, a subarea of artificial intelligence that uses computers to analyze written or spoken language. As chair of the Computer Science Department at Columbia Engineering, she leads a team of researchers, many of whom also work at the nexus of linguistics and computer science. Hirschberg, who was elected this spring to the National Academy of Engineering for her numerous contributions to speech synthesis and speech analysis, shared her perspective on natural language processing (NLP) and artificial intelligence (AI).

Julia Hirschberg (Photo by Jeffrey Schifman)

Q. The National Academy of Engineering cited your contributions to the "use of prosody in text-to-speech and spoken dialogue systems and to audio browsing and retrieval." Tell us a bit about your research.

A. At Bell Labs, I worked on text-to-speech (TTS) synthesis—producing natural, human-sounding speech from text input. TTS is a critical component of spoken dialogue systems like Siri—it lets systems talk with users. Prosody is a major component of naturalness: when people speak, they rarely speak in a monotone. They use falling pitch to indicate statements, rising pitch for yes/no questions, and a variety of other contours to express uncertainty, incredulity, surprise, and other types of information.

Later, when I became head of the Human Computer Interface Research Department and moved to AT&T Labs, I led a group of speech and HCI (human-computer interaction) researchers who developed interfaces to voicemail messages, allowing people to search their voicemail through automatic speech recognition (ASR) transcripts. We provided information in an email-like interface identifying the caller and letting recipients read their messages. We also were able to label messages as personal or business with good accuracy based on training on many voicemail messages our colleagues made available. This system, called SCANMail, was the first to provide these capabilities but was hard to scale due to the ASR-intensive nature of the problem and the lack of sufficient server capacity at the time.

Q. Your research in speech analysis uses machine learning to help experts identify deceptive speech and even to assess sentiment and emotion across languages and cultures. Tell us about this research.

A. We began to study deceptive speech shortly after 9/11 when the Department of Homeland Security decided to fund a multimodal project to identify characteristics of deceptive persons from speech, face, and gesture. We collected what was then the largest cleanly recorded corpus of deceptive and non-deceptive speech and built classifiers that distinguished between truth and lie with 70 percent accuracy. Human raters of the same data had only 58 percent accuracy. We subsequently received AFOSR (Air Force Office of Scientific Research) funding to collect a much larger, multicultural corpus with native speakers of English and Chinese. We are using gender, ethnicity, and personality traits, as well as acoustic and lexical features, to classify truth versus lie.

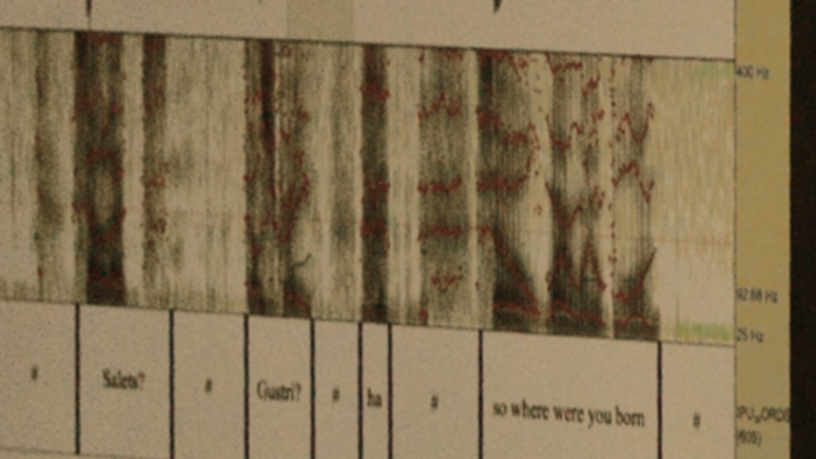

An example of the waveform of an utterance, along with its spectrogram—a visual representation of the power at each frequency—and its phonetic and orthographic transcriptions. (Photo by Jeffrey Schifman)

Q. You also have a doctorate in history. How did you initially get into the field of computer science, and linguistics in particular?

A. I fell in love with CS (computer science) when finishing my PhD thesis in 16th-century Mexican social history while also teaching history at Smith College. I was trying to examine networks of settlers who founded a town in 1531–32, which is now Puebla de los Ángeles, a large Mexican city. I was looking at the social relations between settlers and how that related to things like land ownership and commercial transactions. A friend in CS said, “Julia, this is an AI problem.” In fact, I was doing some form of social network analysis, although none of us knew that at the time. Since historians didn’t have any way of funding such an effort at the time, I ended up learning to program. Eventually, I decided to get a master’s in CS at Penn with a database focus. However, I took a wonderful course in NLP and decided to get a PhD in computational linguistics instead.

My thesis was in computational approaches to interpreting conversational implicature—information listeners infer but which is not explicitly said by speakers. Such behavior is important to model to understand human question-answering. At one point, I was giving a talk about my work, and a linguistics friend noticed that I was using a particular intonational contour in producing my examples. He and I discovered that this was a well-known contour whose meaning was controversial, so we both began to study it. We eventually published a paper in a top linguistics journal and were invited to visit Bell Labs to discuss the work. We drove back saying, “Wow, that would be a wonderful place to work.” I ended up there, with my friend as a longtime collaborator.

In Julia Hirschberg’s lab, students analyze human speech and design speech technologies. Graduate students Victor Soto Martinez (left) and Gideon Mendels work with the lab’s double-walled soundproof booth. (Photo by Jeffrey Schifman)

Q. Where do you see the growth potential for research into natural language processing?

A. NLP is advancing fundamentally in new approaches to modeling semantics—aka “meaning.” One major development is the increasingly interdisciplinary nature of NLP work in collaboration with the humanities, social science, and journalism. New machine learning techniques are being used to build classifiers from larger and larger amounts of data, which makes NLP of increasing interest for business and medical applications. Human-robot interaction is also of increasing interest and beginning to involve speech as well as language researchers. In the medical domain, speech analysis is increasingly being studied to identify medical conditions such as depression and autism. Both speech and text analysis are being used in analyses of political candidates and movement leaders, to determine their ability to attract followers and win elections.

Q. How has your department grown in the past few years?

A. In 2012, when I became chair, we had 38 faculty. Today, we have 49, with another faculty member already coming in the fall, and multiple searches for new faculty in AI and programming languages. We’ve grown in machine learning, security, theory, systems, and HCI, all areas where we already had strength but now we have much more.

Q. What is the student interest in natural language processing and artificial intelligence?

A. Students find computer science as fascinating as I did when I went from history to computer science. No matter what your major, take a CS course and you will find something fascinating about learning how to apply computational tools to problems you’ve encountered but may not even know could be solved. CS departments everywhere are experiencing a huge growth in student interest. For example, Columbia saw a nearly sixfold increase in the number of undergraduate computer science majors over the past 10 years, and enrollments in CS classes almost tripled over that period, reaching more than 9,000.