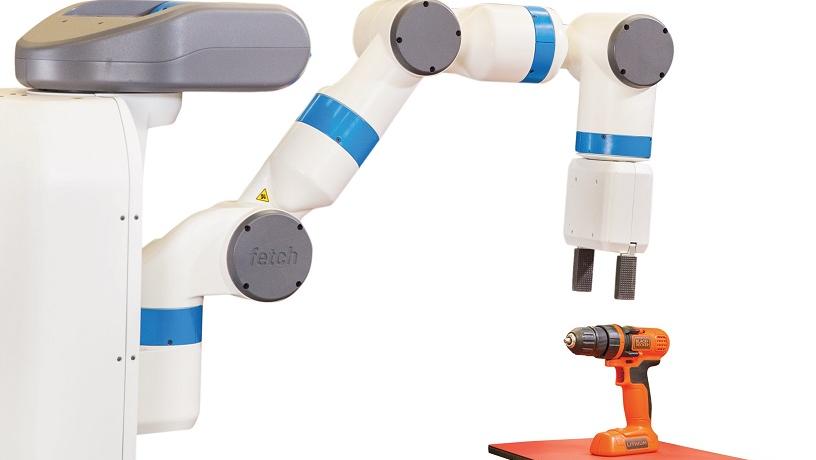

Creating Robotic Assistants for Those in Need

In Computer Science Professor Peter Allen’s lab, robots take their cues from electrical signals generated by a single muscle twitch or by the brain to perform multiple tasks, such as navigating through a cluttered room to fetch a can of potato chips. Pulling off such an intricate series of movements requires the tight integration of artificial intelligence, signal processing and control, robotic path planning, and robotic grasping, the product of thousands of experimental iterations by Allen’s researchers. To accomplish this, the team devised a new brain-muscle computer interface (BMCI) that recreates a remote environment in virtual reality, along with a grasping technology. Linked together, the two enable a user to direct a robot solely through intent.

By integrating object recognition, path planning, and online grasp planning with feedback, these robots can employ discrete multiphase grasping sequences designed to pick up different objects.

A demonstration created by Allen’s team illustrates the scope of the project. In it, a tetraplegic patient in California, outfitted with a single small sensor that picks up surface electromyographic (sEMG) signals, watches a virtual reality interface, built by Allen’s Robotics Group, on a computer screen. Simply by twitching a muscle behind his ear, he directs a robot arm and hand in the Morningside Heights lab to lift selected objects from a table. The researchers’ goal is an interface so well designed that a nonexpert user can approach the grasping task intuitively, within a system that functions smoothly despite sensor noise and other distractions. “The innovation here,” Allen says, “is the ability to recreate with virtual reality the remote environment and simplify the grasping task sufficiently to enable the subject to use the interface to control the robot.”

Assisting severely impaired individuals with robotic grasping systems is an emerging and potentially transformative field. A BMCI lets users choose among computer-modeled 3D grasps for the desired object and then confirm the correct grasp position with a small movement, such as wiggling an ear. The sEMG sensor, placed noninvasively on the skin, picks up a single signal from a muscle site the user has learned to activate, often just behind an ear. That signal triggers a multiphase grasping sequence comprising object recognition, integrated preplanning, and online grasp planning with feedback. The sequence demonstrated by Allen’s team already works well enough for a user to plan and execute grasps in near real time.
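The article does not say how a single muscle twitch is turned into a selection. One common, generic approach for a single sEMG channel is to rectify the signal, smooth it into an envelope, and flag threshold crossings. The Python sketch below illustrates only that general idea. Everything in it is an assumption for illustration, not a detail of the Robotics Group's system: the function names, the window size, threshold, and refractory period, and the hypothetical "scanning" menu in which candidate grasps are highlighted in turn and a twitch confirms whichever one is highlighted.

```python
def envelope(samples, window):
    """Rectify raw sEMG samples and smooth them with a moving average."""
    rectified = [abs(x) for x in samples]
    out = []
    running = 0.0
    for i, v in enumerate(rectified):
        running += v
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out


def detect_twitches(samples, window=5, threshold=0.5, refractory=20):
    """Return sample indices where the envelope rises above the threshold.

    window, threshold, and refractory are hypothetical values; a real system
    would calibrate them per user and per muscle site.
    """
    env = envelope(samples, window)
    onsets = []
    last = -refractory
    above = False
    for i, v in enumerate(env):
        # Count only upward crossings that fall outside the refractory period.
        if v >= threshold and not above and i - last >= refractory:
            onsets.append(i)
            last = i
        above = v >= threshold
    return onsets


def select_grasp(candidates, samples, dwell=100, **detector_args):
    """Hypothetical scanning menu: each candidate grasp is highlighted for
    `dwell` samples in turn, and the first twitch confirms the current one."""
    onsets = detect_twitches(samples, **detector_args)
    if not onsets:
        return None
    return candidates[(onsets[0] // dwell) % len(candidates)]
```

For example, a synthetic recording that is silent except for a burst between samples 150 and 169 produces one detected twitch at sample 152. With a dwell of 100 samples, that twitch lands while the second candidate is highlighted, so `select_grasp(["power", "pinch", "lateral"], signal)` returns `"pinch"`. The refractory period is there so that one sustained contraction is not counted as several selections.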

Allen’s group is now developing a grasping system that relies on brain signals and pupillary response, rather than a single muscle twitch, to signify a user’s intent. These neurological signals derive from a subliminal reaction to visual stimuli, making it possible for an individual with limited expertise to operate the robot. The challenge for the interface in this scenario, says Allen, is to pick out the relevant signals from the many the brain emits.

To add a further layer of augmented reality to the brain-computer interface (BCI), Allen is collaborating with Paul Sajda, professor of biomedical engineering, radiology, and electrical engineering, on a tailored set of ocular augmented reality glasses. The glasses will provide an especially rich view of the surrounding environment, while the interface will off-load certain teachable tasks to a communicating computer, freeing the wearer to focus on other tasks. For instance, a soldier at the wheel could concentrate on driving while also scanning the roadside for explosives, which subliminal brain signals would flag as objects of interest.

In the future, such BCIs could also assist in complex task scenarios where humans and robots interact—tasks such as robotic telesurgery, where a surgeon could use her hands to manage one task while the computer directs a communicating robotic device to handle a related, learnable task, such as providing the best camera view for the procedure.