Sounds Promising

Multimodal AI and the problem of meaning

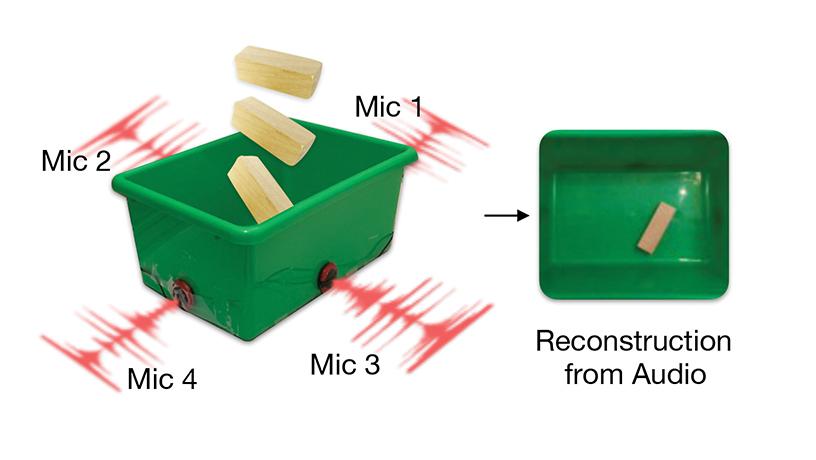

Diagram of the Boombox set up with output.

Carl M. Vondrick, assistant professor of computer science.

You are what’s called a multimodal learner. For instance, you might be reading this text while looking at pictures alongside. Or you might listen to a friend while observing their facial expressions. As a Homo sapiens, you intuitively know you can simultaneously leverage different stimuli to better grasp complex situations. Artificial intelligence could learn a thing or two from you

When it comes to AI, sensing technologies like computer vision, language processing, and audio recognition have led to extraordinary breakthroughs. But reducing input to any one data stream, as current systems do, prevents machines from nimbly navigating through our multifaceted world. Carl M. Vondrick, assistant professor of computer science, specializes in combining various sensory modalities to create more perceptive AI. In one set of experiments, he amassed a vast trove of epic fails from YouTube to teach machines the unpredictable nature of human behavior. In another ongoing project, he’s associating video with audio to facilitate machine translation of scarce languages. Most recently, he collaborated with Associate Professor Changxi Zheng on exploiting silence to create a deep learning model separating speech from background sounds.

Now Vondrick is making a lot of noise.

His latest endeavor, the Boombox, seeks to pair vision and sound in a new paradigm for situational awareness. “The problem with computer vision is that the camera’s always in the wrong place,” Vondrick says. “There’s always occlusions.”

So instead, his team (with mechanical engineer Professor Hod Lipson) rigged a small box with extremely sensitive microphones synchronized with a camera suspended above. Every time someone interacts with the box, the mics pick up distinct vibration patterns associated with each action. Their machine learning algorithm can then reverse engineer a visual scene (cross- checked by camera footage). Early experiments are achieving up to 86% accuracy.

Lifesaving applications aren’t hard to imagine. “I was thinking about how during COVID I’m living in a box,” Vondrick says, “and how this technology could be used to sense when someone has taken a bad fall.”

Deploying it residentially aligns seamlessly with an Internet of Things, in which refrigerators can sense expiring milk and smart speakers await our orders. Vondrick believes this approach could avoid some of the privacy concerns of full camera coverage. It could also aid in piloting robots through emergencies and other scenarios in which visibility is low and precision is paramount. But it hints at an even bigger vision.

“As humans, vision is our dominant sense,” he says. “But intelligence comes in many forms, and we may be overlooking smarter ways of teaching machines.”

Intelligence comes in many forms, and we may be overlooking smarter ways of teaching machines.