Think Like an Engineer

Using AI to invent stronger, more sustainable materials

For years, scientists have used artificial intelligence, particularly machine learning, to sift through their experimental data, searching for patterns that might reveal something new about the world.

But as the technology matures, AI is increasingly seen less as a smart spreadsheet and more as a creative co-pilot, helping researchers design experiments, collect data, and build data analysis tools. In the world of materials research, such computational collaborators could lead to wondrous new compounds. Even more wondrous: using them to train AI to think like an engineer.

One problem users of machine learning face is a lack of sufficient data with which to train the model. That’s especially true in scientific and engineering fields, where data is collected by running experiments and recording the results in notebooks. “Sometimes people use electronic notebooks, but the notebooks don’t talk to each other,” says Simon Billinge, professor of materials science and applied physics and applied mathematics. “The data we need [to fashion smarter experiments] is not sitting in a database anywhere.” He wondered if he could rewire the whole process.

Simon Billinge, professor of materials science and applied physics and applied mathematics.

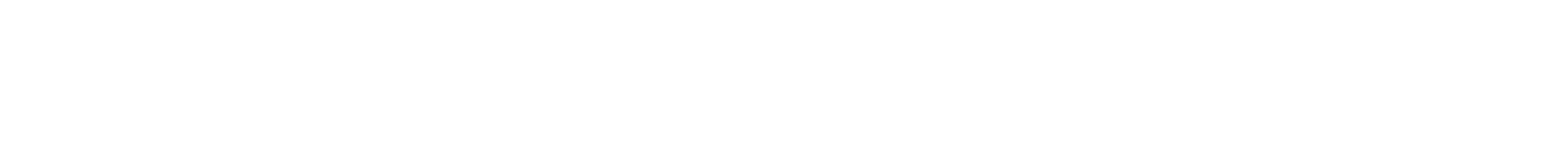

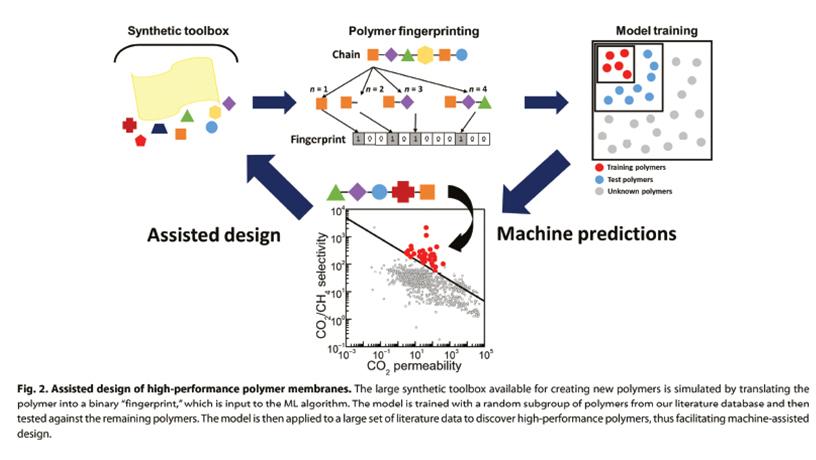

Schematic of how machine learning (ML) is integrated into a materials discovery platform. ML helps determine the correlation between characteristics (“fingerprints”) and properties. Once the correlation is established, the process is reframed as an inverse problem, moving from desired properties to fingerprints using standard tools of optimization.

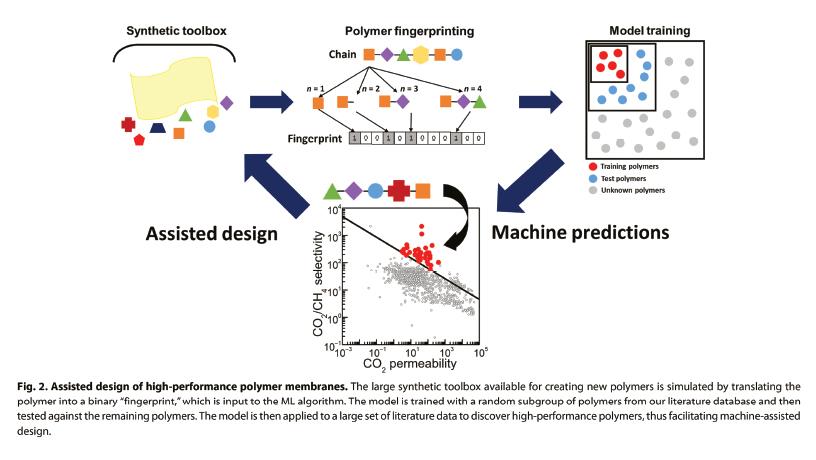

Schematic of an experiment, run by an AI “creative co-pilot,” to make a target material. Moving counterclockwise: the computer sets the “knobs” on the apparatus that control gas flow and temperature. The subsequent reaction takes place in the apparatus (left), mounted in an X-ray beam, producing an X-ray absorption spectrum (lower left) that is measured in real time. Software computes whether changes in the spectrum (bottom) are moving toward the target (lower right). The AI agent uses this information to choose new settings (top right) and sets them (top), and the cycle repeats. There is no human in the loop.

Billinge’s group, working with chemists at Stony Brook University and Oak Ridge National Laboratory, tackled that problem by training AI to speed up data collection. Billinge typically uses AI to determine the chemical structure of manufactured materials. In this proof of concept, he gave his AI an “inverse” problem: Instead of predicting an experiment’s results, he wanted to find a process to get those results—for instance, particular mixtures of copper and oxygen. Once he came up with a method to put that process on autopilot, his machine repeatedly tweaked its own “knobs”—altering temperature and gas ingredients—while measuring the results. It used each result to decide what to try next, a process called adaptive learning.
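The closed loop described above (set the knobs, measure, compare with the target, choose new settings) can be sketched in a few lines. This is a minimal illustration, not the group's software. `measure_spectrum` is a hypothetical toy stand-in for the X-ray measurement, and the two knobs are assumed to be temperature and oxygen fraction in arbitrary units. The decision rule is a simple (1+1) evolution strategy with step-size adaptation, not necessarily the agent the researchers actually used.

```python
import math
import random

ENERGIES = [0.0, 0.25, 0.5, 0.75, 1.0]  # toy, normalized energy grid

def measure_spectrum(temperature, o2_fraction):
    """Hypothetical stand-in for the real measurement: returns a toy 5-point
    "absorption spectrum" whose peak shifts with temperature and grows with oxygen."""
    center = temperature / 1000.0
    return [o2_fraction * math.exp(-((e - center) ** 2) / 0.05) for e in ENERGIES]

def distance(spectrum, target):
    """How far the measured spectrum is from the target (Euclidean distance)."""
    return math.sqrt(sum((s - t) ** 2 for s, t in zip(spectrum, target)))

def clamp(value, low, high):
    return max(low, min(high, value))

def adaptive_loop(target, start, n_cycles=300, seed=0):
    """Set knobs, measure, score, choose new knobs; repeat with no human in the loop."""
    rng = random.Random(seed)
    best = start
    best_score = distance(measure_spectrum(*best), target)
    step = [100.0, 0.2]  # current search radius for (temperature, o2_fraction)
    for _ in range(n_cycles):
        # Propose new knob settings near the best ones found so far.
        trial = (clamp(best[0] + rng.gauss(0.0, step[0]), 300.0, 1000.0),
                 clamp(best[1] + rng.gauss(0.0, step[1]), 0.0, 1.0))
        score = distance(measure_spectrum(*trial), target)
        if score < best_score:
            best, best_score = trial, score
            step = [s * 1.5 for s in step]  # success: widen the search
        else:
            step = [s * 0.9 for s in step]  # miss: search closer to the best point
    return best, best_score

target = measure_spectrum(650.0, 0.8)  # spectrum of the hypothetical target material
settings, score = adaptive_loop(target, start=(400.0, 0.2))
```

Each pass through the loop is one "experiment." The step-size rule widens the search after a success and narrows it after a miss. This is one simple way for a machine to decide what to try next based on its own results.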

“We want the machine to develop a chemical intuition, and share its insights to help us fine-tune ours,” says Yevgeny Rakita Shlafstein, a postdoctoral research scientist in Billinge’s lab. This first demonstration was simple, but it was published in the prestigious Journal of the American Chemical Society, Billinge notes, “showing people that this is what their future looks like.”

“There’s a hunger for this,” says Sanat K. Kumar. “There’s this idea that the scientific method is great, but if there’s a viable shortcut, that could dramatically advance the science.”

Sanat K. Kumar, Bykhovsky Professor of Chemical Engineering.

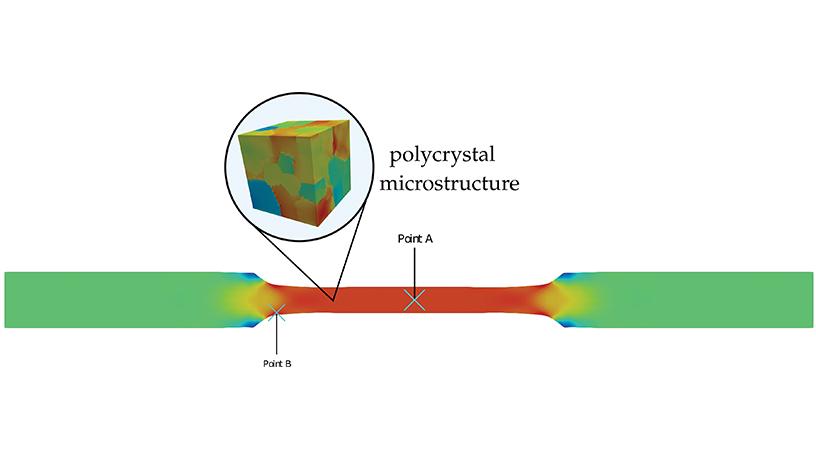

Von Mises stress in a polycrystal specimen predicted by neural networks. Figure reproduced from lassis Sun, CMAME, 0 1.

Kumar, professor of chemical engineering, develops thin plastic membranes that can, for instance, filter carbon dioxide out of smog at power plants. His team measures the properties of existing plastics and then uses machine learning to determine which features of a material give it certain properties.

That allows them to direct their attention to other plastics people have made that might perform well too. “And then, are there others we can come up with to amplify these properties,” Kumar says, “so thus, in principle, I can use that information to create something even better than what exists.”
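Kumar's "create something even better" step is the inverse problem described in the schematic. Once a model maps fingerprints to properties, an optimizer searches fingerprint space for a desired property. The sketch below assumes a hypothetical, already-fitted forward model with three normalized fingerprint features, and it uses projected gradient descent. The coefficients are invented for illustration, and the real fingerprints, models, and optimizers in Kumar's work may differ.

```python
def forward_model(x):
    """Hypothetical fitted model: fingerprint (3 features in [0, 1]) -> predicted property."""
    return x[0] - 0.5 * x[1] + 2.0 * x[2] + 0.8 * x[0] * x[1]

def forward_gradient(x):
    """Analytic gradient of forward_model with respect to each fingerprint feature."""
    return [1.0 + 0.8 * x[1], -0.5 + 0.8 * x[0], 2.0]

def inverse_design(target, start, lr=0.05, n_steps=500):
    """Projected gradient descent on (prediction - target)^2, keeping features in [0, 1]."""
    x = list(start)
    for _ in range(n_steps):
        err = forward_model(x) - target
        grad = forward_gradient(x)
        # Step downhill on the squared error, then clip back into the allowed box.
        x = [min(1.0, max(0.0, xi - lr * 2.0 * err * gi)) for xi, gi in zip(x, grad)]
    return x

existing = [0.2, 0.5, 0.3]  # fingerprint of a hypothetical known plastic
design = inverse_design(target=2.5, start=existing)
```

Many fingerprints can hit the same target. Starting the search from an existing plastic tends to return a design close to that starting point.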

In one study, they trained an algorithm on a dataset containing the chemical structures and gas-filtering abilities of 700 plastics. Then they fed the trained software the chemical information of 11,000 untested plastics and asked it to predict their gas-filtering abilities. It found more than 100 promising candidates. They manufactured and tested only the top two, and the computer’s predictions proved accurate to within 5%, sidestepping the need to run hundreds of experiments.
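The study's workflow can be sketched with a toy dataset: train on measured plastics, predict the untested ones, rank them, and make only the top few. Everything here is hypothetical. The fingerprints are random three-number vectors, `true_property` stands in for a lab measurement, and the dataset sizes are scaled down by a factor of ten. A k-nearest-neighbors regressor is swapped in for whatever model the researchers actually trained.

```python
import random

def true_property(fp):
    """Hypothetical ground truth, unknown to the model (stands in for a lab measurement)."""
    return 2.0 * fp[0] - fp[1] + 0.5 * fp[2]

def knn_predict(train, fp, k=5):
    """Predict a property as the average over the k most similar measured plastics."""
    nearest = sorted(train, key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], fp)))[:k]
    return sum(prop for _, prop in nearest) / k

rng = random.Random(42)

def random_fingerprint():
    return [rng.random() for _ in range(3)]

# Scaled-down stand-ins for the 700 tested and 11,000 untested plastics.
train = [(fp, true_property(fp)) for fp in (random_fingerprint() for _ in range(70))]
candidates = [random_fingerprint() for _ in range(1100)]

# Rank every untested candidate by its predicted property, best first.
ranked = sorted(candidates, key=lambda fp: knn_predict(train, fp), reverse=True)
shortlist = ranked[:100]  # the "promising candidates"
to_make = ranked[:2]      # only the top two go to the lab
```

The costly step, making and testing a plastic, is reserved for `to_make`. Every other candidate is evaluated only by the cheap model.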

Steve WaiChing Sun, associate professor of civil engineering and engineering mechanics.

Schematic of how machine learning (ML) is integrated into a materials discovery platform. ML helps determine the correlation between characteristics (“fingerprints”) and properties. Once the correlation is established, the process is reframed as an inverse problem, moving from desired properties to fingerprints using standard tools of optimization. (Courtesy of Sanat K. Kumar)

Sometimes engineers are trying to build new materials. Sometimes they are trying to understand why existing materials fail—especially when those materials are used to build airplanes and nuclear reactors.

Take polycrystals, the mélanges of crystal fragments that make up most such components, including steel, ceramics, rocks, and ices. Steve WaiChing Sun, associate professor of civil engineering and engineering mechanics, has created an AI-driven approach to predict, given a polycrystal’s structure and a particular deformation, how stress, damage, and fracture manifest inside it. While the traditional full simulation can take a few days to run, Sun’s trained neural network typically obtains nearly the same prediction within seconds.
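The speedup comes from training a fast surrogate on the outputs of a slow simulation. In the toy sketch below, `expensive_simulation` is a hypothetical one-dimensional stand-in (a hardening law integrated in many small steps), not a polycrystal model. The surrogate is a random-feature network: one tanh hidden layer with fixed random weights, where only the output layer is fitted by ridge-regularized least squares. Sun's networks are far larger and trained differently, so this only illustrates the idea of training once and then predicting quickly.

```python
import math
import random

def expensive_simulation(strain, n_steps=5000):
    """Hypothetical slow model: integrate d(stress)/d(strain) = E * (1 - stress / sigma_sat)
    with many small explicit Euler steps."""
    E, sigma_sat = 200.0, 1.0  # illustrative, dimensionless values
    stress, d_eps = 0.0, strain / n_steps
    for _ in range(n_steps):
        stress += E * (1.0 - stress / sigma_sat) * d_eps
    return stress

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

class RandomFeatureNet:
    """One tanh hidden layer with fixed random weights; only the linear output layer is trained."""

    def __init__(self, n_hidden=30, strain_scale=0.02, seed=0):
        rng = random.Random(seed)
        self.scale = strain_scale
        self.hidden = [(rng.uniform(-6, 6), rng.uniform(-3, 3)) for _ in range(n_hidden)]
        self.out = None

    def features(self, strain):
        x = strain / self.scale  # normalize the input to roughly [0, 1]
        return [1.0] + [math.tanh(w * x + b) for w, b in self.hidden]

    def fit(self, strains, stresses, ridge=1e-6):
        phi = [self.features(s) for s in strains]
        n = len(phi[0])
        # Normal equations: (Phi^T Phi + ridge * I) w = Phi^T y
        A = [[sum(row[i] * row[j] for row in phi) + (ridge if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        b = [sum(row[i] * y for row, y in zip(phi, stresses)) for i in range(n)]
        self.out = solve(A, b)
        return self

    def predict(self, strain):
        return sum(w * f for w, f in zip(self.out, self.features(strain)))

train_strains = [0.02 * i / 59 for i in range(60)]
net = RandomFeatureNet().fit(train_strains, [expensive_simulation(s) for s in train_strains])
```

Generating the training data is a one-time cost. After that, `net.predict` replaces a loop of thousands of steps with a few dozen `tanh` evaluations.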


Sun doesn’t just use AI to build better materials; he also uses it to design better AI. What’s the right type of neural network for approximating a simulation? Is another type of algorithm even better? Borrowing strategies from game theory, Sun turns these questions into a puzzle for his designer AI. As the machine tries to boost its score, which is keyed to how well the resulting network approximates simulations, it experiments with different options, say, adding another layer to the neural network or using different types of neurons. In a related project, he uses multiple AIs to design experiments that generate training data for the network. By instructing those AIs to compete against one another, Sun estimates a Nash equilibrium for their actions. He then uses it to probe for weaknesses in a material model, challenging the network to eliminate them. Within days, the data-generating AI could consider millions of scenarios that might take a scientist years or even decades to tackle manually.
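A tiny zero-sum game can illustrate the idea of competing AIs settling into a Nash equilibrium. This is a toy assumption, not Sun's method. A hypothetical "attacker" picks one of two loading paths, and a "defender" picks one of two material models. The payoff is an invented prediction-error matrix that the attacker wants to maximize and the defender wants to minimize. Fictitious play, in which each side repeatedly best-responds to the other's history, is used here as a simple classical way to approximate the equilibrium. It is not necessarily how Sun's agents work.

```python
# Hypothetical error matrix: rows = attacker's loading paths (uniaxial, shear),
# columns = defender's candidate material models (A, B). Entries are invented.
ERROR = [[3.0, 1.0],
         [0.0, 2.0]]

def fictitious_play(payoff, n_rounds=20000):
    """Approximate a mixed Nash equilibrium of a zero-sum matrix game.
    The row player (attacker) maximizes the payoff; the column player (defender) minimizes it."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    row_counts, col_counts = [0] * n_rows, [0] * n_cols
    # Cumulative payoff each pure strategy would have earned against the opponent's history.
    row_totals, col_totals = [0.0] * n_rows, [0.0] * n_cols
    r, c = 0, 0  # arbitrary opening moves
    for _ in range(n_rounds):
        row_counts[r] += 1
        col_counts[c] += 1
        for i in range(n_rows):
            row_totals[i] += payoff[i][c]
        for j in range(n_cols):
            col_totals[j] += payoff[r][j]
        # Each side best-responds to everything the other has played so far.
        r = max(range(n_rows), key=lambda i: row_totals[i])
        c = min(range(n_cols), key=lambda j: col_totals[j])
    attacker = [k / n_rounds for k in row_counts]
    defender = [k / n_rounds for k in col_counts]
    return attacker, defender

def value_bounds(payoff, attacker, defender):
    """Lower and upper bounds on the game value guaranteed by the two mixed strategies."""
    lower = min(sum(attacker[i] * payoff[i][j] for i in range(len(attacker)))
                for j in range(len(defender)))
    upper = max(sum(payoff[i][j] * defender[j] for j in range(len(defender)))
                for i in range(len(attacker)))
    return lower, upper

attacker, defender = fictitious_play(ERROR)
lower, upper = value_bounds(ERROR, attacker, defender)
```

For this matrix, the exact equilibrium has the attacker splitting evenly between the two paths and the defender choosing model B three times out of four, for a game value of 1.5. The gap between `lower` and `upper` shrinks toward zero as the number of rounds grows.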

“We don’t have to be limited by the human imagination,” he says.