When Words on Twitter Trigger Real-World Violence

Tweeting under the handle TyquanAssassin, Gakirah Barnes taunted and threatened rival gang members but sometimes expressed conflicting feelings of grief.

“The pain is unbearable,” she tweeted in 2014, after Chicago police allegedly shot and killed her best friend. Two weeks after typing those words, Barnes was gunned down by a rival gang member.

Just 17, she left behind an unusual legacy: more than 27,000 tweets, a modern equivalent of War and Peace tapped out in bursts of 140 characters or fewer. Starting the day she opened her Twitter account in 2011 and ending with her death in April 2014, Barnes’s words give researchers a window on the emotions fueling gang violence in Chicago.

A team led by Desmond Patton (right), Kathleen McKeown, and Owen Rambow (not pictured) is building a tool that can identify Twitter posts that could lead to violence.

(Photo by Kim Martineau)

Patton was curious to see what more could be learned from Barnes’s Twitter feed. With the help of Chicago teenagers and social workers, he translated the lingo and, in February, published an analysis of her tweets.

Taking the work a step further, he recently teamed up with Columbia’s Data Science Institute to develop a violence-prevention tool that can detect highly emotional speech on Twitter.

A team led by Patton and computer scientists Kathleen McKeown and Owen Rambow has developed an algorithm that looks for grief- and aggression-themed posts, which social workers and violence-prevention workers can use to understand an underlying conflict and defuse it. McKeown, a pioneer in natural language processing, is Gertrude Rothschild Professor of Computer Science at Columbia Engineering and heads the Data Science Institute.

While gang violence has declined in New York and Los Angeles, it persists in Chicago’s poorest, most segregated neighborhoods. Barnes’s murder made international news in part because of her dual roles as victim and victimizer. The news photo of the gap-toothed girl in her graduation gown, handed to the media by her mother, stood in sharp contrast to the images of Gakirah Barnes brandishing her gun on YouTube.

To develop the algorithm, the team labeled a subset of Barnes’s tweets as “aggression,” “loss,” or “other,” and developed a program to tag the nouns, verbs, and other parts of speech. They also created a dictionary of Barnes’s vocabulary, including translations of the emojis she sprinkled throughout her writing. They used an “emotion” dictionary to rate words by how pleasant, intense, or concrete they were.
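The dictionary steps described above can be sketched roughly in Python. This is only an illustration under stated assumptions, not the team's code: the glossary entries follow the two readings given in the figure caption, the emotion scores are invented placeholders, the names `GLOSSARY`, `EMOTION_LEXICON`, `normalize`, and `emotion_features` are hypothetical, and part-of-speech tagging is left out because it would need an external library.

```python
from statistics import mean

# Glossary mapping emoji and slang to plain-English tokens. The two entries
# follow the readings given in the article's figure caption; using the 😷
# character for the "facemask emoji" is an assumption.
GLOSSARY = {
    "😷": "no_snitching",
    "100": "serious",
}

# HYPOTHETICAL emotion lexicon: word -> (pleasantness, intensity, concreteness).
# The scores are invented placeholders on an arbitrary 1-3 scale.
EMOTION_LEXICON = {
    "pain": (1.0, 2.5, 1.5),
    "unbearable": (1.0, 2.8, 1.2),
    "serious": (1.8, 2.2, 1.4),
}


def normalize(text):
    """Lowercase, split on whitespace, trim punctuation, apply the glossary."""
    tokens = []
    for raw in text.lower().split():
        word = raw.strip(".,!?\"'“”")
        if word:
            tokens.append(GLOSSARY.get(word, word))
    return tokens


def emotion_features(text):
    """Average each emotion dimension over the words found in the lexicon."""
    scored = [EMOTION_LEXICON[t] for t in normalize(text) if t in EMOTION_LEXICON]
    if not scored:
        return None  # no lexicon coverage for this post
    pleasant, intense, concrete = zip(*scored)
    return {
        "pleasantness": mean(pleasant),
        "intensity": mean(intense),
        "concreteness": mean(concrete),
    }


# Example: score the quotation that opens the article.
features = emotion_features("The pain is unbearable")
```

Splitting on whitespace keeps the sketch short, but it would miss emoji typed directly against a word, so a real pipeline would likely need a tokenizer built for social media text.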

Training their algorithm on the labeled data, they developed a model to predict whether a post in a larger sample of Barnes’s tweets should be classified as aggression, loss, or other. Next, they hope to mine tweets Barnes exchanged with friends to see whether they can develop a method for detecting underlying conversations. This would give prevention workers further context. “The people who intervene then know why the teenagers are upset or angry and how to defuse the situation,” said McKeown.
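To make the training step concrete, here is a minimal sketch of a three-way text classifier. The article does not say which model the team used, so a small multinomial Naive Bayes with add-one smoothing stands in for it here. The training sentences are invented for illustration and are not drawn from Barnes’s tweets, and the names `EXAMPLES`, `train`, and `predict` are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Invented, hand-labeled examples standing in for the labeled subset.
EXAMPLES = [
    ("i miss my friend so much", "loss"),
    ("rest in peace brother", "loss"),
    ("you better watch your back", "aggression"),
    ("we are coming for you", "aggression"),
    ("going to the store later", "other"),
    ("watching the game tonight", "other"),
]


def tokenize(text):
    return text.lower().split()


def train(examples):
    """Count labels and per-label word frequencies."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for tok in tokenize(text):
            word_counts[label][tok] += 1
            vocab.add(tok)
    return label_counts, word_counts, vocab


def predict(model, text):
    """Return the label with the highest smoothed log-probability."""
    label_counts, word_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for tok in tokenize(text):
            if tok in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][tok] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(EXAMPLES)
label = predict(model, "i miss my brother")
```

A model trained on six sentences is only a toy. In practice the word counts would likely be combined with the part-of-speech and emotion-dictionary features the team describes, and the model would be evaluated on held-out labeled posts.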

Eventually, they hope to set their algorithm loose on live Twitter and Facebook feeds and, potentially, other social media platforms. A Chicago-based nonprofit, Cure Violence, will take the lead on interpreting posts that are flagged and decide how to intervene.

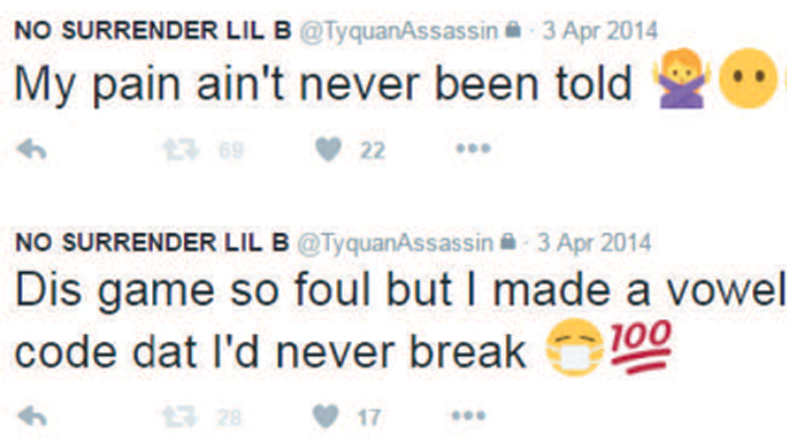

Researchers have developed an algorithm to recognize grief- and aggression-themed tweets. In the top tweet, Chicago gang member Gakirah Barnes mourns the loss of her best friend. Below it, she vows to never snitch. Facemask emojis are code for “no snitching” and “100” means she’s serious.

(Courtesy of Desmond Patton)