Ethics 2.0

A long-standing ethics curriculum expands for our data-driven age

Helio Fred Garcia tailors ethics courses for undergraduate and graduate students. (Photo by Tony Ning)

Hod Lipson (left) and Chad DeChant (right) focused on AI’s rapidly evolving capabilities. (Lipson photo by Timothy Lee Photographers/DeChant photo courtesy of Chad DeChant)

Chris Wiggins cocreated a course on how technologies transform the social and political order. (Photo by Barbara Alper)

Inside a packed lecture hall in Pupin, some 200 engineering students are settling in for an early-semester session of Art of Engineering (AoE), the yearlong survey course required of all first-years.

Each Friday, AoE guest lecturers deliver insights into a different aspect of the discipline. Some weeks, students learn about designing soft materials; other weeks, circuit analysis might be on the agenda. Today, they are debating matters of life and death.

“Early in your career, I’m here to get you to think about the difference between doing great good in the world and doing great harm in the world,” says Helio Fred Garcia, a faculty member from Columbia’s Professional Development and Leadership (PDL) program, as he opens the class. For the next 90 minutes, Garcia leads a lively discussion weighing tricky case studies, defining key concepts, and teasing out the nuances in competing definitions of right and wrong.

Such sessions are one pillar of Columbia Engineering's wide-ranging ethics curriculum, which starts in a student's first semester and runs through all levels of graduate study.

“Engineering is about improving lives, and ethics is inherent in that,” says PDL executive director Jenny Mak. “The world’s just getting more complicated, and as educators we have a responsibility to equip our students to contribute.”

In the case of PDL, a three-year-old program designed to refine essential professional and life skills, that means ethics is considered as fundamental as résumé writing and communication, and its courses are tailored to the global world of engineering. "We have a very diverse and international population, many with ambitions to enter complex global organizations. Part of what we do is provide a common vocabulary for students to make ethical and informed decisions," Mak says.

Irving Herman, the Edwin Howard Armstrong Professor of Applied Physics, has been offering graduate-level ethics seminars since 2006. His course is now required of the department's master's and doctoral students; the doctoral students must take it twice, once in their first year and again as a "booster shot" in their second. "The idea is to hammer this home," he says.

For Herman, teaching ethics has a personal dimension. “You remember the Challenger disaster? One of those on board was a friend of mine from graduate school.”

That case study, in which engineers were pressured to approve a launch despite their own safety concerns, is among the more than 250 examples he draws on in the classroom, covering issues from academic misconduct to faked data sets and workplace dynamics.

“Ethics touches everything we do. People need to be aware of when something is an issue, and to have given some thought to the issues, in order to be able to deal with it,” he says.

While engineering has never been without ethical dilemmas, the rise of the surveillance economy, disinformation, and autonomous vehicles has brought a heightened skepticism about data-driven products and the moral compass of the tech companies that produce them.

Increasingly, aspiring software engineers on campus also wanted to interrogate the societal impact of their work.

“There were all these use cases. Students were concerned,” says Chris Wiggins, an associate professor of applied math. “They wanted to understand, ‘how do I know my culpability?’”

To delve into such big questions, Wiggins developed Data: Past, Present, and Future with Matthew Jones from the history department in 2017. Their course—which fulfills a nontechnical requirement for engineers and a technical requirement for students in the humanities—puts data into a larger cultural context, exploring how new technologies transform the social and political order.

Throughout the semester, students read up on the history of statistics and privacy and then turn to GitHub and Jupyter Notebooks to gain first-hand insight into how suspect statistics get generated.

“There are all kinds of ways to fool yourself if you’re not self-aware and self-critical,” Wiggins says. “The story of data is a story about truth and power.”

Wiggins originally created Data: Past, Present, and Future as a space for debating topics that often fall outside a typical machine learning class, but he now brings thinking informed by the class into other settings, such as his applied math seminar, so that engineering students can better understand their own agency.

“History is operationalizable,” he says, “by looking back at history and realizing not that things had to be that way, but in fact that there are a lot of things in our world and our worldview that are historical accidents or were greatly contested. And looking at things that were contested gives you a sense for how things could be in the future.”


Augustin Chaintreau leads an effort to embed ethics across computer science’s undergraduate curriculum. (Photo by John Abbott)

“We provide a common vocabulary for students to make ethical and informed decisions,” says PDL head Jenny Mak. (Photo courtesy of Jenny Mak)

For a look at how regulation could shape the future of automation, computer science PhD candidate Chad DeChant worked with his adviser, Professor Hod Lipson, to create AI Safety, Ethics, and Policy. Lipson, the James and Sally Scapa Professor of Innovation in Mechanical Engineering and a member of the Data Science Institute, is an expert on smart machines. DeChant proposed that they develop a 3000-level course in which students could engage in robust discussions of AI capabilities grounded in realistic scenarios. That didn't stop conversations from sometimes veering into territory that sounded a bit otherworldly.

“We talked about ways to teach AI our ethical systems. One question that grew out of that was, ‘At what point do these systems become so sophisticated that we have to consider respecting them as ethical systems in their own right?’” DeChant says. “Some people think that could happen in our lifetimes.”

When it comes to the rapidly evolving field of AI, which in recent years has unleashed deepfakes and biased algorithms, "we are completely out in uncharted territory," says Lipson. "Regulation can't keep up; the access to equipment and know-how is too easy. The only thing left is our ethics."

Lipson says engineers shouldn't expect to walk away from classes like this one with all the answers, but rather with the critical thinking skills needed to build an individual ethical framework that will evolve with the technology. But the intersection of AI and ethics is a two-way street, he believes, and some level of technical literacy will become a necessity in every discipline. "Everyone should understand what AI can really do," he says. Otherwise, society lacks the tools to engage in good-faith debates.

“I see a lot of knee-jerk reactions. But I can give you the pros and cons of every angle,” he says. “We have a project that trains drones to fly over cornfields, identifying and spraying only infected plants. Using this technology could dramatically decrease the use of pesticides while increasing yield. But bit for bit, it’s the same technology used to track people.”

DeChant, who has an undergraduate degree in philosophy and a master's in international relations, found such conundrums easier to explore thanks to Columbia's Core Curriculum. "Many of the students had already taken several philosophy courses," he says. "It would have been very daunting to cover basic ethics in a class trying to cover so many elements and apply these concepts to computer science."

In addition to surveillance, the AI course covered economic impacts, human rights in an era of autonomous weapons, and the right to be forgotten. In their papers, students engaged in creative programming, such as devising mechanisms by which corporations could compensate users for their data. In a room full of seniors, such assignments were particularly timely. "They're all fielding job offers," DeChant says. "It was a group of people just finishing one chapter and starting another in a reflective mood."

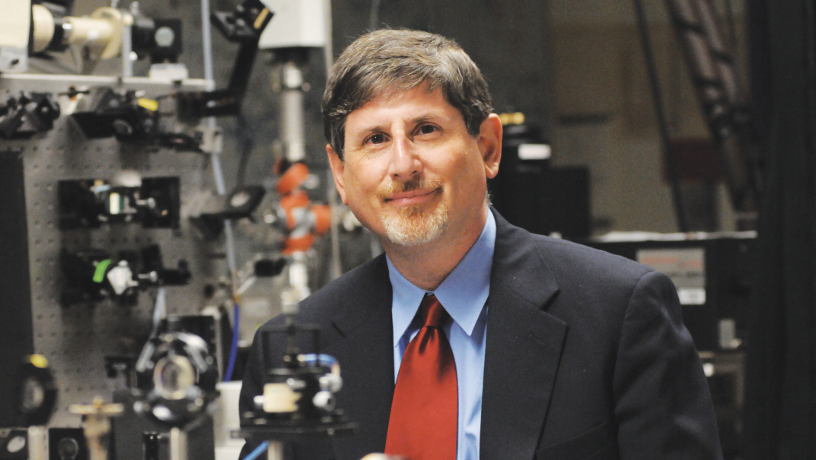

Irving Herman has been teaching ethics since 2007. (Photo by Eileen Barroso)

Stand-alone courses represent one arm of the School's approach. Last semester, Associate Professor Augustin Chaintreau won a grant from the Responsible Computer Science Challenge to create a suite of course materials aimed at embedding ethics across the department's entire undergraduate curriculum. An initiative funded by Omidyar Network, Mozilla, Schmidt Futures, and Craig Newmark Philanthropies, the Challenge is spending $3.5 million to integrate ethics into computer science curricula nationwide, and the modules created at Columbia Engineering will be made freely available to other institutions. Here on campus, Chaintreau's Challenge funds also support the hiring of new TAs drawn from psychology, anthropology, sociology, law, and journalism to ensure classroom conversations account for diverse perspectives. "When you learn math, you think it's very easy to apply math," Chaintreau says. "It's actually very hard. Ethics is the same."

Thus far, computer science faculty members Nakul Verma, Lydia Chilton, Carl Vondrick, Daniel Hsu, and Tony Dear have all begun incorporating ethics directly into their coursework. Associate Professor Roxana Geambasu, Percy K. and Vida L. W. Hudson Professor of Computer Science Steve Bellovin, and Assistant Professor Suman Jana are among those with active research projects on related issues.

In Chaintreau's classroom, ethical implications are part and parcel of the coding. In one assignment, students studying social networks were tasked with building an EgoNet from putatively anonymous data collected from the class. Once they implement it correctly, students see just how easy it is to reveal the behavioral traits of specific classmates. "You just learned something dangerous from a privacy standpoint," he says.

Those kinds of lessons tend to have a profound ripple effect, he says. "Once you explain the ethical ramifications of something, they want to ask about other things. What matters is the next generation of engineers joining industry will demand to find ways they can make ethical decisions."

This ethics-first approach isn’t just about molding the hearts and minds of the next Mark Zuckerbergs. It’s also about serving the greater good by expanding the idea of who gets to be an engineer in the first place.

"Exploring these concepts helps those students who feel they are forgotten by computing. They wonder, 'Is computer science welcoming to me?'" Chaintreau says. "It shows we're aware of the issues, and that can be a source of reassurance: stay in the class. Stay in the discipline."