Self-correcting Algorithms

Augustin Chaintreau (center) with students

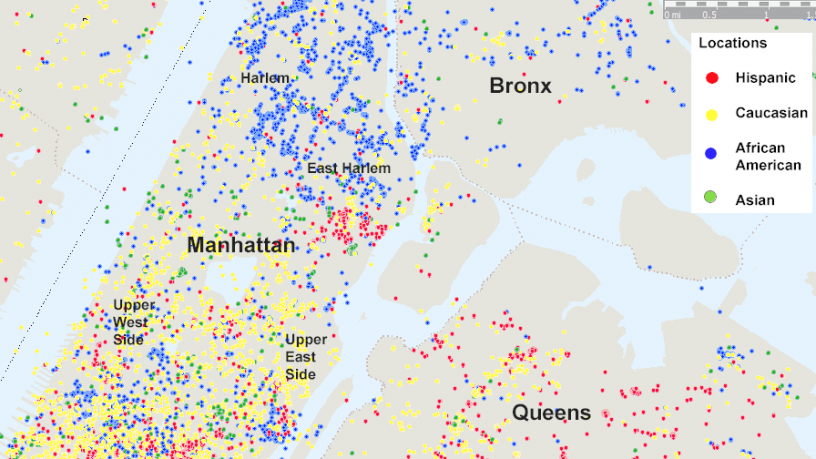

This map of Upper Manhattan shows Instagram account holders by location, broken down by ethnicity as defined by the US Census.

Imagine a world where algorithms can improve and self-correct.

Social media recommendations can exert an outsize influence on what we see, hear, eat, and buy, and increasingly on how we behave. So how can we make sure the algorithms that power these recommendations connect users with desired content without importing bias from the offline world?

Augustin Chaintreau, associate professor of computer science, studies the effects of algorithms with the aim of making them fairer, more efficient, and more accountable, and of making the networks that connect us more reliable.

“Algorithms can have a certain effect in a particular context,” says Chaintreau. “The growth of a network can reinforce patterns that we don’t want to reinforce.”

That’s what Chaintreau recently found in a study of the unintended consequences of homophily (our tendency to gravitate toward people and ideas we find similar) on Instagram. His group determined that the platform’s recommender algorithms amplified the representation of men over women, even though women use the site more. Moreover, the study found that the algorithms produced a greater imbalance than the spontaneous growth of the social network would have.

Demonstrating evidence of bias was just a first step; Chaintreau is now exploring possible ways to address that bias without overcorrecting, with the ultimate goal of proving that more responsible algorithms are technically feasible and universally beneficial.

“We don’t want to be silencing voices,” he says.

Given the widespread use of social media, it’s not surprising that Chaintreau’s expertise has been sought after by industry—he often partners with companies looking to develop more equitable recommendation systems. But his commitment to fairness extends far beyond social media. For instance, at Columbia, Chaintreau has also worked with professors Roxana Geambasu and Daniel Hsu in computer science to develop Sunlight, a web transparency tool that reveals to users how ads target them.

“I want to help create a world where people can trust the decisions that are made by data,” Chaintreau says.