Engineering the Future of Cultural Preservation

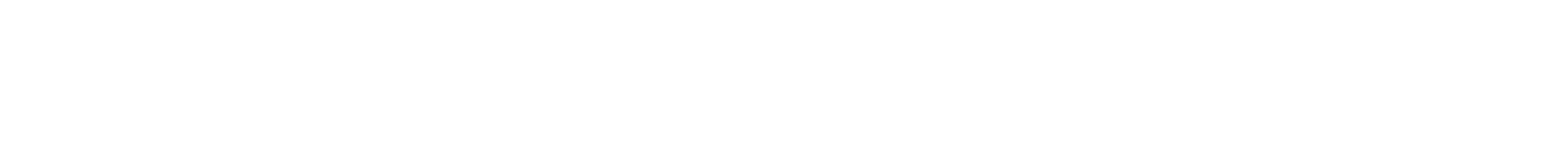

Partial model of the Cathedral of Saint Pierre in Beauvais, France, developed by Columbia University researchers by merging multiple range scans.

This past spring, shock rippled out across the globe as news spread that France’s Notre Dame Cathedral had gone up in flames. A masterpiece of Gothic architecture, the church had stood for nearly eight centuries, a soaring testament to humanity’s technical ingenuity and the human spirit’s capacity for transcendence. Its near loss was an acute reminder of just how fragile the objects that tell the deepest stories about who we are can be.

Fire may be a particularly dramatic example, but the cornerstones of our cultural heritage are constantly at risk. Precious artifacts fall prey to corrosion, vibration, and contamination, and the knowledge they contain is easily lost to history. Conserving them has always been as much science as art.

“Sometimes I’m hanging off of a bridge taking measurements, sometimes I’m applying the same technique to the Temple of Dendur,” says Andrew Smyth, an expert in structural vibrations who consults for art museums. “Once you understand how an object is constructed, you can understand its mechanics and begin to predict how it will respond to stress.”

In the popular imagination, technologists embody the future. But Smyth is one of a number of engineering faculty bringing a fresh perspective to some very old problems. Around the school, computer scientists work to decode historic construction techniques, materials scientists parse literary gems, nanoengineers plumb shipwrecks, and augmented reality researchers document old ways of life in 3D. Using the latest science, they’re unlocking ancient secrets before time runs out on our collective past.

Resurrecting Notre Dame

Before the embers cooled on the conflagration that engulfed Notre Dame, France’s leaders vowed it would rise from the ashes in just five years. Their announcement immediately sparked another debate: should the country reconstruct this medieval marvel or design a modern update? That jury’s still out, but any attempt to reconstitute France’s national treasure will have to grapple with a fundamental question—what was really there in the first place?

To fix something, you have to understand how it works. That simple premise often belies a complicated reality, especially in the world of historic preservation. Take old buildings. Notre Dame may be a unique specimen, the high-tech skyscraper of its day, but like many historic structures, it wasn’t assembled according to a specified plan. Without such schematics, there’s no clear way to understand its underlying mechanics, so restoration can be as much detective work as construction project. In one sense, Notre Dame was lucky: that groundwork had been laid years ago, when researchers captured a precise 3D computer-generated replica of the building for posterity.

Completed in 2010, that model was the brainchild of art historian Andrew Tallon ’07, who, with the assistance of computer scientist Paul Blaer SEAS’08, used mirror-steered laser scans to conjure up a virtual blueprint accurate down to the centimeter, preserving an exact record reverse engineered from the stones themselves.

Recently, architectural scans have emerged as a go-to tool for preservationists thanks to progress on two tracks: the scanners themselves, and efficient algorithms for first producing the scans and then stitching them together into a cohesive model. But the technology underpinning them is less than 20 years old, and it was aided by advances developed here on campus during the years Tallon studied at Columbia.

Back in 2001, computer science professor Peter Allen, later Blaer’s PhD advisor, and art history professor Stephen Murray, who advised Tallon’s PhD, hit upon a very big idea. At the time, Allen was building small 3D object models using lasers in his robotics lab. Murray wondered whether Allen’s techniques could be applied on a grander scale, specifically to produce the first-ever scan of another majestic French cathedral, St. Peter of Beauvais. The fact that no edifice of that size and complexity had yet been successfully rendered in virtual form didn’t faze anyone on the team. “We’re Columbia researchers and engineers,” says Allen. “We don’t say ‘no.’ We say, ‘we’ll figure out how to do that.’”

Scanning and Modeling the Cathedral of Saint Pierre, Beauvais, France

After months of preliminary experimentation, the group boarded a plane for Europe. Two weeks and 75 million data points later, they returned to Morningside Heights with the raw files for a model that could not only represent the building from every angle but also peel away the additions and adjustments layered over the original cathedral to reveal its underlying structure.

The team had to run a gauntlet of technical hurdles to create it. No one had yet designed a system for efficiently piecing together the hundreds of individual 3D scans into one coherent model. The researchers, who at the time also included Ioannis Stamos ’01, made significant progress by developing algorithms that merged the scans automatically and more efficiently than a human could, improving the model’s accuracy right out of the gate. To make the model photorealistic, they developed a novel method for automatically mapping disparate camera images onto the 3D model for a fully textured effect.
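
The article does not spell out how the team’s merging algorithms worked. As a rough illustration of what “merging” involves, the sketch below shows one standard building block of scan registration, assumed here rather than taken from the Columbia work: given points already matched between two overlapping scans, find the rotation and translation that best superimpose them in the least-squares sense. It is simplified to 2D for brevity, and the names `align_2d` and `apply_transform` are hypothetical. Iterative pipelines such as ICP (iterative closest point) repeat a step like this, re-matching points after each alignment.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def apply_transform(theta: float, t: Point, p: Point) -> Point:
    """Rotate point p by theta radians about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


def align_2d(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Least-squares rigid alignment of matched 2D points.

    Returns (theta, t) minimising sum ||R(theta) p_i + t - q_i||^2, where
    src[i] = p_i and dst[i] = q_i are assumed to be corresponding points.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(src)
    # Centroids of each point set.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Sums of dot and cross products of the centred pairs; the optimal angle
    # maximises cos(theta) * dot_sum + sin(theta) * cross_sum.
    dot_sum = cross_sum = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        dot_sum += ax * bx + ay * by
        cross_sum += ax * by - ay * bx
    theta = math.atan2(cross_sum, dot_sum)
    # Translation carries the rotated source centroid onto the target centroid.
    rx, ry = apply_transform(theta, (0.0, 0.0), (sx, sy))
    return theta, (dx - rx, dy - ry)


if __name__ == "__main__":
    scan_a = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
    # Simulate a second scan of the same features, rotated 30 degrees and shifted.
    scan_b = [apply_transform(math.pi / 6, (5.0, -3.0), p) for p in scan_a]
    theta, t = align_2d(scan_a, scan_b)
    print(math.degrees(theta), t)  # approximately 30.0 and (5.0, -3.0)
```

With exact correspondences the fit recovers the simulated rotation and shift up to floating-point error; real scans add noise and unknown matches, which is why iterative schemes re-estimate both.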

But first, they had to rethink the scanning process itself. The massive number of scans their model required spurred the invention of computational tools for reducing that number. These tools grew out of Blaer’s dissertation, which devised optimal algorithms for scanner placement using War of 1812-era forts on New York’s Governors Island as a case study.
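
Blaer’s dissertation is described here only as devising algorithms for scanner placement. One classic way to frame the problem, assumed here for illustration and not necessarily his formulation, is as set cover: each candidate scanner position sees some set of surface patches, and the goal is to choose few positions that together see every patch. The sketch below uses the standard greedy heuristic, which is approximate rather than optimal; the function name `plan_scans` and the example data are hypothetical.

```python
from typing import Dict, List, Set


def plan_scans(visible: Dict[str, Set[int]], targets: Set[int]) -> List[str]:
    """Greedy set cover for view planning.

    visible maps each candidate scanner position to the surface patches it can
    see. Positions are chosen one at a time, each time taking the one that sees
    the most patches not yet covered, until every target patch is covered.
    """
    uncovered = set(targets)
    chosen: List[str] = []
    while uncovered:
        # max() returns the first maximal candidate, so ties go to dict order.
        best = max(visible, key=lambda pos: len(visible[pos] & uncovered), default=None)
        gain = visible[best] & uncovered if best is not None else set()
        if not gain:
            raise ValueError(f"patches not visible from any candidate: {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= gain
    return chosen


if __name__ == "__main__":
    candidates = {
        "A": {1, 2, 3},
        "B": {3, 4},
        "C": {4, 5, 6},
        "D": {1, 6},
    }
    print(plan_scans(candidates, {1, 2, 3, 4, 5, 6}))  # ['A', 'C']
```

Here four candidate positions collapse to two scans. In a real survey the visibility sets would come from a model of the site, and constraints such as occlusion, scanner range, and the overlap needed to register neighboring scans would typically shape the choice as well.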

From a technical perspective, old buildings have much to offer computer science—their handmade design and hidden nooks and crannies serving as ideal testbeds for resolving glitches in computer vision, notes Blaer, who’s also a lecturer at Columbia Engineering. And computer science has been returning the favor.

“Paper degrades. Buildings can only fight gravity for so long. But we can construct and store such massive datasets now that we’re able to virtually preserve things for the long run,” Blaer says.

Shaking up the Art World

On a daily basis, humans pummel our bridges, roads, and buildings with millions of pounds of force, a fact that civil engineers must factor in when maintaining infrastructure designed to last for centuries. Inside an art gallery, those same forces (foot traffic, nearby construction, pulsing sound) can cause delicate objects to flake, crack, bend, or even “walk” off their shelves as vibrations repeatedly nudge them toward the edge. In the spring of 2012, New York’s famed Metropolitan Museum of Art faced just such a shaky proposition. The time had come to completely renovate its Costume Institute. The only problem: the Costume Institute sits directly beneath the Met’s wildly popular Egyptian wing, 27 galleries housing some 20,000 objects, among them some of the most fragile in the museum, including mummies, dried flowers, and ruins from the two-thousand-year-old Temple of Dendur. Deinstalling the collection and shipping it off to storage simply wasn’t an option. Instead, the Met called Andrew Smyth.

A civil engineering professor with expertise in using sensor networks to monitor and avert mechanical failures, Smyth deployed a network of accelerometers programmed to automatically alert key personnel whenever construction crews hammering away below exceeded preset vibration thresholds. But the odds were already stacked in the Egyptian wing’s favor. Based on data from a series of pilot tests, Smyth’s team had pre-installed several stabilizing measures, such as targeted damping materials that reduced an object’s shaking by up to 80%. For particularly vulnerable galleries, they went a step further, incorporating spring-loaded granite pedestals tuned to oscillate at the same frequency as the floor. “That’s a very different way of solving the problem,” Smyth says. “You’re actually altering the way the building vibrates.”
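
Two ideas in this paragraph lend themselves to a quick sketch. The first is threshold alerting: flag every accelerometer reading whose magnitude exceeds a preset limit. The second is tuning: if a pedestal is idealized as a single mass on a spring, its natural frequency is f = (1/2π)√(k/m), so matching a target floor frequency means choosing a stiffness k = m(2πf)². This single mass-spring model is a textbook simplification assumed here, not the Met team’s design, and the function names, units, and numbers are hypothetical.

```python
import math
from typing import List, Tuple


def exceedances(readings: List[Tuple[float, float]], limit: float) -> List[float]:
    """Timestamps of (time, acceleration) readings whose magnitude exceeds limit.

    In a monitoring system each returned time would trigger an alert; here we
    just collect them. Units are whatever the sensor reports (e.g. m/s^2).
    """
    return [t for t, accel in readings if abs(accel) > limit]


def tuned_stiffness(mass_kg: float, target_hz: float) -> float:
    """Spring stiffness k in N/m that gives an idealized mass-spring system the
    natural frequency target_hz, from f = sqrt(k / m) / (2 * pi)."""
    return mass_kg * (2.0 * math.pi * target_hz) ** 2


def natural_frequency(mass_kg: float, stiffness: float) -> float:
    """Natural frequency in Hz of an idealized mass on a spring."""
    return math.sqrt(stiffness / mass_kg) / (2.0 * math.pi)


if __name__ == "__main__":
    log = [(0.00, 0.01), (0.01, -0.05), (0.02, 0.02), (0.03, 0.07)]
    print(exceedances(log, limit=0.03))  # [0.01, 0.03]
    k = tuned_stiffness(mass_kg=500.0, target_hz=12.0)
    print(round(natural_frequency(500.0, k), 6))  # 12.0
```

The round trip through `natural_frequency` simply confirms that the chosen stiffness reproduces the target frequency under this idealized model.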

Many institutions have since applied Smyth’s techniques in scenarios ranging from rap concerts at member events to shipping items on loan, while a former student on the Met team (now a faculty member at Oxford University) has built on the research to develop tools for stabilizing hospitals, transportation systems, and even nuclear waste during earthquakes.

Partial sensor placement during slab shots, part of a collaboration between Civil Engineering Professor Andrew Smyth and New York's Metropolitan Museum of Art.

Physics meets “bacteria poop”

In 1545, Henry VIII’s favorite warship, the Mary Rose, sank in the English Channel just off the coast of Portsmouth. In 1982, researchers raised it and installed it in the eponymous museum, where it has now been viewed by more than 60 million people. Hauling the brittle remains of a 600-ton Tudor-era warship up from beneath four stories of seawater was just the first of the engineering challenges facing conservators at the Mary Rose Trust, however. In 2014, materials expert Simon Billinge worked with colleagues at the Trust to identify a mysterious deterioration process that threatened to turn this nearly 500-year-old shipwreck into dust.

Billinge, whose day job as a professor includes advancing the physics behind cleaner energy and better medicine, has pioneered techniques for parsing the atomic structure of materials. His group cracked the Mary Rose case by imaging how x-rays scatter through sample cross sections, using that information to precisely characterize the nanoscale materials hidden deep in the Tudor wood. Comparing the resulting images pixel by pixel allowed the researchers to determine that over the centuries the wood had become riddled with nanoparticles of zinc sulfide. Raising the ship from the seabed’s anaerobic environment kick-started an oxidation process that converted that sulfide into zinc sulfate and, ultimately, into sulfuric acid that ate away at the hull. How the zinc sulfide built up inside the wood wasn’t hard to deduce: it was clearly the byproduct of millions of microscopic sulfur-metabolizing organisms, or bacteria poop in layman’s terms.
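
The first chemical step in this paragraph can be summarized, in simplified form, as the net oxidation of zinc sulfide to zinc sulfate. The balanced equation below is a textbook net reaction written here for illustration; the article does not detail the actual pathway in the wood, including the further steps that produce sulfuric acid.

```latex
\mathrm{ZnS} + 2\,\mathrm{O_2} \longrightarrow \mathrm{ZnSO_4}
```

Zinc, sulfur, and all four oxygen atoms balance on each side. The sulfur goes from the -2 oxidation state in the sulfide to +6 in the sulfate, which is what makes this an oxidation, and why exposure to air after the ship left the oxygen-poor seabed matters.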

This discovery led to a new conservation method: the application of strontium carbonate, which promises to preserve not only the ship itself but also the organic materials among the 19,000 artifacts salvaged along with the wreckage. But “this isn’t just a big deal for conservators to understand,” Billinge notes. “It’s also a big deal for the study of bacteria ecology. These kinds of sulphur eating organisms are difficult to study and our map offers insights into how they self-organize in colonies and what they feed on.”

Taking a core sample from the hull of the Mary Rose, a 16th-century warship raised from the floor of the English Channel in 1982.

Disappearing Ink

Blue hand-painted initial causing deterioration of paper. Bartholomaeus Anglicus. De proprietatibus rerum. Nuremberg: Anton Koberger, 1492. Archives and Special Collections, Augustus C. Long Health Sciences Library, Irving Medical Center, Columbia University (AE2 .B37 1492 Q), detail, f. 1.

It was a master’s student, continuing on from his undergraduate studies in the materials science and engineering program of the Department of Applied Physics and Applied Mathematics, who brought materials expert Katayun Barmak together with Alexis Hagadorn and Emily Lynch of the Columbia University Libraries’ Conservation Lab. In designing his master’s research project, the student, Michael Berkson, hoped to combine his research in materials science with his interest in art conservation, which had been sparked by a talk he heard about the work of Columbia’s Ancient Ink Laboratory. That lab, of which Hagadorn was a member, was at the time an offshoot of the university’s Nano Initiative, run by electrical engineer James Yardley. Yardley’s lab used nanotechnology to elucidate the material properties of inks painted millennia ago, many of which were composed of unknown ingredients. Using spectroscopic signatures, which register the way a material reflects light, the Ancient Ink group could also determine the age of a given manuscript.

Berkson’s timing couldn’t have been better. Hagadorn, current head of Columbia’s conservation department, had identified the cause of a peculiar type of degradation manifesting in one of the library’s rare 15th-century encyclopedias. However, her research had raised further questions. Created at the dawn of the printing press, this volume contained a mix of mechanically printed text and hand-painted blue and red initials. Oddly, only the hand-painted initials were turning the paper beneath them brown before eating away at it. Hagadorn intuited that the blue ink involved, a common copper-derived pigment that pops up in everything from European illuminated manuscripts to Far Eastern scroll paintings, was somehow to blame. But since this ink is known for being extremely stable over long periods and under varying conditions, some previously unknown cause had to be at work. Having determined that a component in the blue ink was interacting with the original artist’s surface preparation of the paper, a previously unrecorded phenomenon, she set out to pin down the mechanisms at fault.

“I thought, I’d love to explore that, but I definitely need a scientist to help me,” she says. Luckily, at Columbia, where the arts and sciences are considered two sides of the same coin, such cross-disciplinary collaborations are easy to come by.

“Materials scientists are interested in conservation because they have a way of thinking they can bring to the problem,” says Barmak from her art-filled office on the 11th floor of Mudd, where she’s gathered with Hagadorn and Lynch. This manuscript degradation, she says, “would be equivalent to the corrosion of metals causing your car to rust. It’s very much in the vein of the materials science paradigm.”

Once Barmak signed on, the project quickly became a master class in experimental design. Together with her students (six in all have taken up the project since its inception), she first had to craft a set of tests capable of narrowing down a multitude of parameters, such as ink recipes and base materials, all while controlling for humidity, pH, temperature, and a myriad of other factors. To do that, Barmak drew on her background in R&D at IBM to use a factorial design approach, in which multiple parameters are varied simultaneously to determine not only the most important parameters but also the interactions between them. Barmak also drew on connections across the engineering school to access sophisticated microscopy and other state-of-the-art equipment.

In many cases, though, the group had to develop its own protocols to ensure repeatability and minimize sample-to-sample variation. Because conservation is a small field, few tools are purpose-built for it, and the team spent hours just brainstorming ways to apply the ink consistently. “You’re imitating something that really wasn’t standardized,” Hagadorn says. “So, for instance, how uniform the paint application was wasn’t the concern of the creator. But it has to be one of ours.” Here, Lynch contributed a bit of inspired improvisation. “I found these plastic spatulas used in the cosmetics industry,” she says. “They had the perfect width, so we just dragged them across in one swoop.”
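
Barmak’s actual test matrix is not given here, but the factorial idea can be sketched. In a two-level full factorial design, each of k factors is set to a low (-1) or high (+1) level in every combination, giving 2^k runs. The effect of a factor, or of an interaction between factors, is estimated as the mean response where the product of the relevant coded levels is +1 minus the mean where it is -1. The factors A, B, and C (which could stand for parameters such as ink recipe, humidity, or temperature) and the response formula are invented purely for illustration and are not the group’s data.

```python
from itertools import product
from typing import List, Sequence, Tuple

Run = Tuple[int, ...]


def full_factorial(k: int) -> List[Run]:
    """All 2**k runs of a two-level design, levels coded -1 (low) and +1 (high)."""
    return list(product((-1, 1), repeat=k))


def effect(runs: Sequence[Run], responses: Sequence[float], factors: Sequence[int]) -> float:
    """Estimated main effect (one factor index) or interaction (several indices).

    Each run gets the sign of the product of its coded levels for the given
    factors; the effect is mean(response | +1) - mean(response | -1).
    """
    plus, minus = [], []
    for run, y in zip(runs, responses):
        sign = 1
        for f in factors:
            sign *= run[f]
        (plus if sign > 0 else minus).append(y)
    return sum(plus) / len(plus) - sum(minus) / len(minus)


if __name__ == "__main__":
    names = ["A", "B", "C"]  # hypothetical factors
    runs = full_factorial(len(names))
    # Made-up response: effects of A and B plus an A x B interaction, nothing from C.
    ys = [10 + 3 * a + 1 * b + 2 * a * b for a, b, _ in runs]
    for i, name in enumerate(names):
        print(name, effect(runs, ys, [i]))
    print("A x B", effect(runs, ys, [0, 1]))
```

From eight runs the script recovers effects of 6.0 for A, 2.0 for B, 0.0 for C, and 4.0 for the A x B interaction; each is twice the coefficient in the made-up formula because an effect spans the full range from -1 to +1. Varying all factors at once in this way is what lets a single set of runs separate main effects from interactions.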

So far, their work has identified exposure to elevated temperatures as one of the contributing factors, though not the only one. The group, which fully intends to publish its findings, believes the degradation factors it is investigating could have wide ramifications for the conservation field, given how ubiquitous the ink is.

“I’ve worked on technologies that 30 years later have not yet hit the market,” says Barmak. “But here, you’re immediately connecting to the past and you’re preserving it for the future. To have played even a small part in protecting these beautiful books is very satisfying.”

Augmenting Reality

When historian Pamela Smith first set out to produce scholarly work on a previously unknown 16th-century French manuscript, she knew the end result would live in a digital format; she just imagined it would be a fairly conventional one. Then she met computer scientist Steve Feiner, and began to imagine how augmented reality (AR) could turn that idea on its head.

“Once I talked to Steve, I realized how limited my conception of representing the manuscript for publication was,” she says. “He made me rethink what a ‘book’ could be.”

Smith’s plan already involved an ambitious program to co-create an open-source, open-access, open-ended digital environment in which users could manipulate and contribute to a translated, annotated, and deconstructed version of the text. Deploying such inventive strategies for preserving and advancing knowledge at the intersection of art, history, and science has long been a focus for the professor. As founder of the Making and Knowing Project, Smith is the only humanities faculty member at Columbia currently running a chemistry lab, where students across the disciplines can gather to investigate historical materials, techniques, and technologies.

Life-cast beetles created by the Making and Knowing Project following recipes found in a 16th-century artisan's manuscript.

With Feiner’s input came the opportunity to layer on a whole other dimension. The 16th-century manuscript currently at the heart of their project, an early artisan’s how-to on craft techniques to produce objects and materials in various media, immediately struck a chord with the computer science professor, whose own lab has been redefining what’s possible in AR for decades. “In my work I’m particularly interested in developing new tools to assist people in performing skilled tasks in a wide range of domains,” he says.

For historians, documenting bygone processes is as critical as chronicling artifacts themselves. So, Feiner’s group created an AR app that allows Making and Knowing researchers to spatiotemporally record phases of their experiments by overlaying artifacts with 3D photos, videos, and notes captured in the lab in real time. “We wanted to make their process richer,” he says. Data from that tool will come online when Smith’s group launches the first phase of their website this January; the group plans to roll out a more robust version that includes curricula, templates, case studies and additional pedagogical materials in subsequent months. In the meantime, Feiner’s group is also at work creating a 3D AR model of Smith’s lab that will allow users around the globe to step inside and explore.
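
How Feiner’s app stores its records is not described. As a minimal sketch of what “spatiotemporal” documentation could mean in data terms, assumed here for illustration, each capture can be a record tying a piece of media to an artifact, a 3D position in the lab, and a timestamp, so that the history of an experiment can be replayed in order. The `Annotation` class, its fields, and the `timeline` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Annotation:
    """One capture anchored in space and time (hypothetical schema)."""
    artifact_id: str
    position: Tuple[float, float, float]  # metres, in an assumed lab coordinate frame
    timestamp: float                      # seconds since the experiment began
    kind: str                             # e.g. "photo", "video", "note"
    content: str                          # file path or note text


def timeline(records: List[Annotation], artifact_id: str,
             start: float, end: float) -> List[Annotation]:
    """Records for one artifact captured between start and end (inclusive), oldest first."""
    hits = [r for r in records
            if r.artifact_id == artifact_id and start <= r.timestamp <= end]
    return sorted(hits, key=lambda r: r.timestamp)


if __name__ == "__main__":
    log = [
        Annotation("cast-1", (0.4, 1.1, 0.9), 620.0, "photo", "cast-1_a.jpg"),
        Annotation("cast-1", (0.4, 1.1, 0.9), 30.0, "note", "example note text"),
        Annotation("cast-2", (1.2, 0.8, 1.0), 300.0, "video", "cast-2_a.mp4"),
    ]
    for r in timeline(log, "cast-1", 0.0, 3600.0):
        print(r.timestamp, r.kind, r.content)
```

Keeping position and time on every record is what would let an AR view place each photo, video, or note back where and when it was captured; a production system would add spatial anchors, media storage, and authorship, none of which is modeled here.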

However visitors engage with her lab, Smith hopes they’ll come away with a new appreciation for older ways of learning by doing. “There’s a really important aspect of human knowledge that we don’t give much attention to these days, and that’s hands-on knowledge,” she says. “Hands-on knowledge is part of how we learn innovation and improvisational creativity.”

For us humans, that creative essence has long united our seemingly disparate endeavors.

“We’ve come to think of science and art as two realms that are very far apart,” she says. “But art in the 16th century was about investigating nature in order to make objects. We’re entering a period when both worlds are coming back together.”

An early prototype of a smartphone AR tool that allows digital historians to create spatiotemporal documentation.